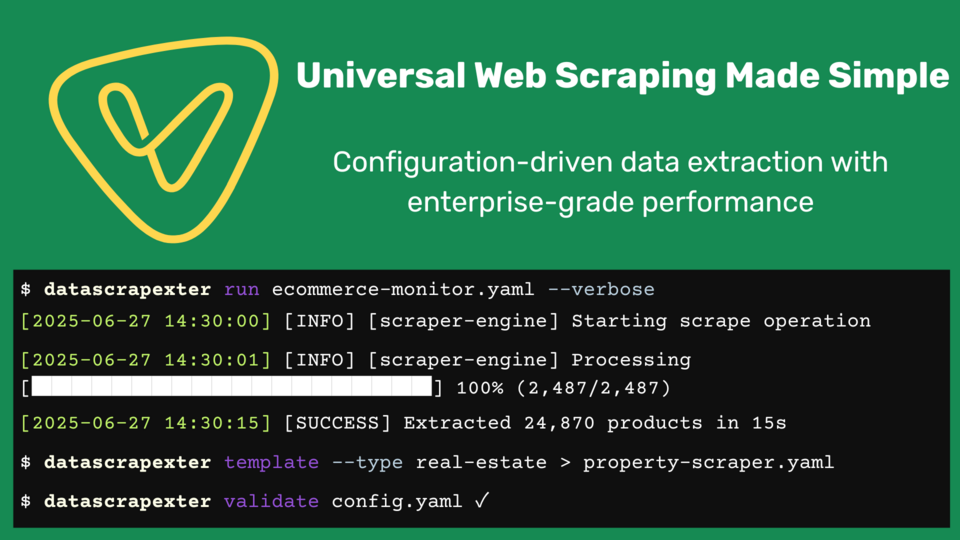

DataScrapexter

Professional Data Scraper

Brief Overview

DataScrapexter is a high-performance, Go-based web scraping platform designed to extract data from any website while overcoming sophisticated anti-scraping measures. Built on Go 1.24+ with a robust tech stack (Colly, Goquery, chromedp), it offers four packages tailored to diverse needs:

- Basic Package: Affordable, open-source solution for simple static websites, ideal for hobbyists and small-scale projects.

- Standard Package: Enhanced features like JavaScript rendering and proxy rotation for small to medium businesses tackling dynamic sites.

- Premium Package: Production-ready with advanced anti-detection, scalability, and monitoring for professional users and enterprises.

- Advanced Package: Enterprise-grade platform with AI-driven adaptation, compliance, and custom integrations for complex, large-scale operations.

Additional options include custom feature development, consulting, and premium support for tailored solutions.

Note: Pricing details for premium packages and custom features are available at https://www.fiverr.com/pere_val/develop-custom-web-scraping-solutions-for-data-extraction.

Detailed Overview

Product Description

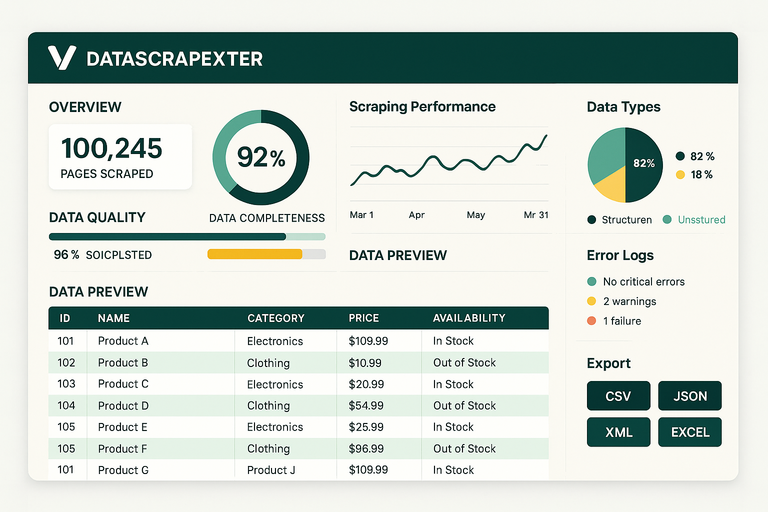

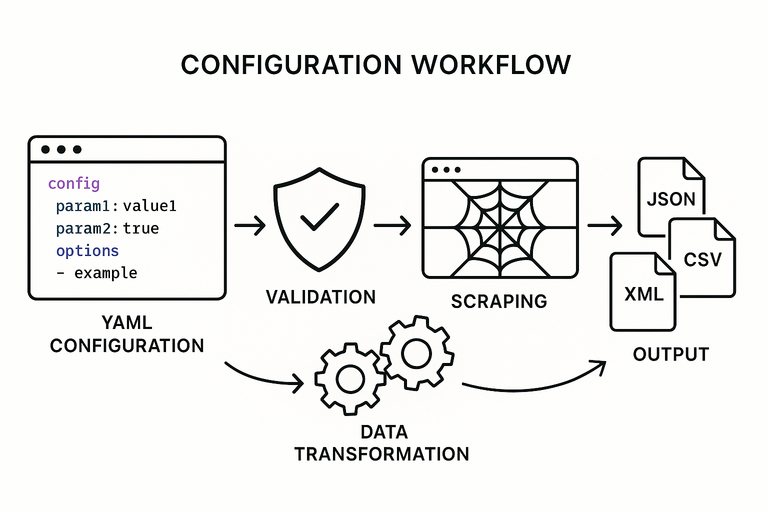

DataScrapexter is a modular, scalable web scraping solution built on Go 1.21+, designed to handle diverse websites—from static HTML to JavaScript-heavy single-page applications (SPAs)—while evading advanced anti-scraping protections. It combines configuration-driven operation (via YAML/JSON with Viper) with sophisticated anti-detection mechanisms (chromedp, Rod, 2Captcha) and a robust data processing pipeline (GORM, Kafka). The platform supports multi-format outputs (JSON, CSV, Excel, databases) and integrates with cloud services (AWS, Google Cloud) for storage and analytics, ensuring compliance with GDPR/CCPA through built-in features like robots.txt respect and audit logging.

Package Details

1. Basic Package (MVP, v0.1.0)

Target Audience: Hobbyists, individual developers, small startups, and data analysts.

Key Features:

- Core Scraping: HTTP client (net/http) with Goquery for CSS selector-based data extraction.

- Anti-Detection: Basic User-Agent rotation (50+ browser signatures), fixed rate limiting (1-5 second delays), and manual HTTP proxy support.

- Configuration: YAML-based site definitions managed by Viper.

- Output: Exports data to JSON and CSV formats.

- CLI: Cobra-based command-line interface for user-friendly operation.

- Logging: Structured logging with logrus for debugging and monitoring.

Use Case Example: A freelance data analyst needs to extract product listings from 10 static e-commerce sites for market research. They create a YAML configuration to target product titles and prices:

name: "ecommerce_site"

base_url: "https://example-shop.com"

fields:

- name: "title"

selector: "h2.product-title"

type: "text"

- name: "price"

selector: ".price"

type: "text"

rate_limit: 2s

CLI Example:

# Run the scraper with the configuration file

datascrapexter scrape --config configs/ecommerce_site.yaml --output products.json

# View help for the scrape command

datascrapexter scrape --help

# Output:

# Usage:

# datascrapexter scrape [flags]

# Flags:

# -c, --config string Path to the configuration file (required)

# -o, --output string Output file path (JSON or CSV)

# -h, --help Help for scrape

The scraper processes 100+ pages reliably, exporting clean JSON data to products.json for analysis in tools like Python or Excel.

Limitations:

- No JavaScript rendering support.

- Manual proxy configuration required.

- Limited error recovery mechanisms.

- No distributed processing capabilities.

Success Metrics:

- Successfully scrape 10+ static websites.

- Process 100+ pages without errors.

- Achieve community adoption (target: 1,000+ GitHub stars).

2. Standard Package (v0.5.0)

Target Audience: Small to medium businesses, SaaS startups, and professional data teams.

Key Features (extends Basic):

- JavaScript Rendering: chromedp integration for handling React, Vue, Angular sites, AJAX requests, and infinite scroll.

- Advanced Anti-Detection: Automatic proxy rotation with health monitoring, basic browser fingerprint randomization, and CAPTCHA detection with manual intervention alerts.

- Data Processing: Text cleaning, field validation, deduplication, and database support (SQLite, PostgreSQL).

- Configuration: Reusable template system, environment variable-based secret management, and hot reloading for configuration updates without restarts.

- Browser Pool: Managed Chrome instances for concurrent scraping tasks.

Use Case Example: A SaaS company monitors competitor pricing on a JavaScript-heavy e-commerce platform. They use a headless browser to render dynamic content:

name: "competitor_site"

base_url: "https://competitor.com"

browser:

enabled: true

headless: true

timeout: 30s

fields:

- name: "product"

selector: ".product-name"

type: "text"

- name: "price"

selector: ".dynamic-price"

type: "float"

proxy:

enabled: true

rotation_strategy: "random"

CLI Example:

# Run the scraper with proxy rotation and database output

datascrapexter scrape --config configs/competitor_site.yaml --output postgres://user:pass@localhost:5432/dbname --proxy-list proxies.txt

# Check scraper status

datascrapexter status --job-id competitor_site_20250624

# Output:

# Job ID: competitor_site_20250624

# Status: Running

# Pages Processed: 750/1000

# Errors: 2 (retrying)

# Estimated Completion: 10m remaining

The scraper handles 1,000+ pages, rotates proxies automatically, and stores validated data in PostgreSQL for integration with real-time pricing dashboards.

Success Metrics:

- Scrape 1,000+ dynamic pages reliably.

- Support integration with 5+ proxy providers.

- Attract 500+ active users and initial paying customers.

Limitations:

- Requires manual CAPTCHA solving.

- Limited scalability for massive datasets.

- Lacks enterprise-grade monitoring and analytics.

3. Premium Package (v1.0.0)

Target Audience: Professional services, mid-size companies, and enterprises.

Key Features (extends Standard):

- Advanced Anti-Detection: Integration with 2Captcha/Anti-Captcha for automated CAPTCHA solving, canvas/WebGL spoofing, human-like behavior simulation (mouse movements, typing patterns), and TLS fingerprinting (JA3/JA4 randomization).

- Monitoring & Observability: Prometheus metrics collection, pprof performance profiling, real-time health monitoring, and detailed error analytics.

- Scalability: Distributed processing with Redis coordination, configurable worker pools, priority-based task scheduling, and auto-scaling capabilities.

- Enterprise Features: Web-based dashboard for configuration and monitoring, RESTful API for programmatic control, role-based access control (RBAC), and comprehensive audit logging.

- Proxy Support: Premium residential proxy integrations (e.g., Bright Data, Oxylabs) for geo-targeted scraping.

Use Case Example: A market research firm extracts 10,000+ pages hourly from news websites protected by advanced anti-bot systems. They use stealth browsers and residential proxies:

type ProductionScraper struct {

Engine *ScrapingEngine

Monitor *MonitoringSystem

Scheduler *TaskScheduler

}

CLI Example:

# Start a distributed scraping job with monitoring

datascrapexter scrape --config configs/news_site.yaml --output kafka://localhost:9092/news-topic --workers 10 --monitor prometheus://localhost:9090

# View real-time metrics

datascrapexter metrics --job-id news_site_20250624

# Output:

# Job ID: news_site_20250624

# Pages/Second: 278

# Success Rate: 96.5%

# Active Workers: 10

# Memory Usage: 412MB

# Alerts: None

The web dashboard provides real-time success rate monitoring (95%+), while Prometheus dashboards identify performance bottlenecks, ensuring configuration updates in under 5 seconds. Data is streamed to Kafka for downstream analytics.

Success Metrics:

- Process 10,000+ pages per hour.

- Achieve 95%+ success rate on protected websites.

- Generate $100K+ annual recurring revenue (ARR) with 1,000+ paying customers.

Limitations:

- Lacks AI-driven adaptation for site layout changes.

- Limited automation for regulatory compliance reporting.

4. Advanced Package (v1.5.0)

Target Audience: Large enterprises, data-driven organizations, and technology innovators.

Key Features (extends Premium):

- AI-Powered Adaptation: Machine learning-generated extraction rules, automatic detection and adaptation to website layout changes, AI-based content classification, and data quality scoring.

- Advanced Content Processing: Natural language processing (NLP) for context-aware extraction, optical character recognition (OCR) for image-based data, and semantic relationship mapping.

- Intelligent Anti-Detection: ML-driven human behavior simulation, adaptive timing adjustments, and real-time anomaly detection to avoid bot traps.

- Advanced Analytics: Predictive analytics for scraping success, AI-driven performance tuning recommendations, cost optimization for proxy and resource usage, and trend analysis.

- Scalability: Kubernetes-based auto-scaling, multi-node coordination with Redis Streams, and priority-based task queues.

Use Case Example: A global retailer extracts product reviews from 100+ e-commerce platforms with frequent layout changes. The Advanced package leverages AI to auto-generate extraction rules:

type AIEnhancedScraper struct {

MLEngine *MachineLearningEngine

Classifier *ContentClassifier

}

CLI Example:

# Run an AI-enhanced scraping job with adaptive rules

datascrapexter scrape --config configs/reviews.yaml --output bigquery://project:dataset.reviews --ai-adapt --workers 20

# Train ML model for new site

datascrapexter train --config configs/reviews.yaml --samples samples/reviews.json

# Output:

# Training Complete

# Model ID: reviews_20250624

# Accuracy: 99.2%

# Adaptation Time: 18h

# Ready for Deployment: Yes

The system adapts to layout changes within 24 hours, achieves 99% accuracy in classifying review sentiment, and optimizes proxy usage to reduce costs by 50%. Data is enriched with NLP-derived insights and stored in Google BigQuery for analysis.

Success Metrics:

- Reduce manual configuration effort by 90%.

- Achieve 99% accuracy in content classification.

- Generate $1M+ ARR with 5,000+ enterprise users.

Limitations:

- Higher computational resource requirements.

- Complex setup may require technical expertise.

5. Advanced - Custom Features (v2.0.0)

Target Audience: Enterprises with unique requirements, system integrators, and strategic accounts.

Custom Options:

- Bespoke Anti-Detection: Custom fingerprinting strategies and proxy configurations for specific target sites.

- Custom Integrations: Seamless connectivity with CRM, BI tools, or proprietary systems via REST/GraphQL APIs or Kafka streams.

- Compliance Automation: Tailored GDPR/CCPA compliance workflows, including automated data anonymization and reporting.

- Consulting Services: Expert-led configuration, performance optimization, and staff training.

- Private Deployments: On-premises or virtual private cloud (VPC) setups with zero-trust security architecture.

Use Case Example: A Fortune 500 company requires a private scraper for aggregating data from 1,000+ intranet sites. The Advanced package delivers a custom Kubernetes deployment with single sign-on (SSO) integration and GDPR-compliant audit logging:

apiVersion: apps/v1

kind: Deployment

metadata:

name: custom-scraper

spec:

replicas: 5

template:

spec:

containers:

- name: scraper

image: custom-scraper:latest

env:

- name: VAULT_ADDR

value: "https://vault.company.com"

CLI Example:

# Deploy custom scraper with private configuration

datascrapexter deploy --config configs/intranet.yaml --k8s-namespace scraper-prod --vault-secret vault://secret/scraper

# Monitor deployment health

datascrapexter health --namespace scraper-prod

# Output:

# Deployment: custom-scraper

# Status: Healthy

# Replicas: 5

# CPU Usage: 1.2 cores

# Memory Usage: 2.3Gi

# Alerts: None

Consulting services optimize the scraper for sub-second API response times, and training equips the team to manage configurations independently.

Success Metrics:

- Support 1,000+ concurrent enterprise users.

- Achieve SOC 2 Type II certification.

- Generate $10M+ ARR with 100+ enterprise customers.

Additional Options

- Premium Support: 24/7 dedicated support with service-level agreements (SLAs) and account management.

- Configuration Marketplace: Community-driven templates for rapid deployment across popular websites.

- Training Platform: Interactive tutorials, certifications, and best practices for onboarding teams.

- Professional Services: End-to-end setup, performance tuning, and ongoing maintenance by DataScrapexter experts.

Go-to-Market Strategy

- Basic/Standard Packages: Open-source core with premium features, targeting developers and SMBs through GitHub, Go package registries, and community forums.

- Premium/Advanced Packages: Tiered SaaS model with usage-based billing, marketed via direct sales, partner channels, and industry conferences.

- Advanced - Custom Features: Enterprise licensing with custom terms, delivered through strategic partnerships and dedicated enterprise sales teams.

Why Choose DataScrapexter?

- Performance: Go’s lightweight goroutines enable 10,000+ pages/hour with <512MB memory per instance.

- Evasion: Advanced anti-detection (chromedp, Rod, 2Captcha) ensures 95%+ success rates on protected sites.

- Flexibility: YAML/JSON configuration supports any website type, from static to SPA, with multi-format outputs.

- Scalability: Kubernetes-ready architecture with auto-scaling and distributed processing for massive workloads.

- Compliance: Built-in GDPR/CCPA compliance, robots.txt respect, and audit logging ensure ethical scraping.

DataScrapexter empowers users to extract web data efficiently, ethically, and at scale, offering tailored packages for every user—from hobbyists to global enterprises. Its Go-based architecture, combined with AI-driven innovation and enterprise-grade features, positions it as a leader in the web scraping industry.